Find Out Who's Talking About DeepSeek and Why You Should Be Concerned

DeepSeek AI is up 9.64% within the last 24 hours. DeepSeek v3 trained on 2,788,000 H800 GPU hours at an estimated cost of $5,576,000. For comparison, Meta AI's Llama 3.1 405B (smaller than DeepSeek v3's 685B parameters) trained on 11x that: 30,840,000 GPU hours, also on 15 trillion tokens. Meta hired Clara Shih, former CEO of Salesforce AI. Nvidia founder and CEO Jensen Huang said the market got it wrong about DeepSeek's technological advancements and their potential to hurt the chipmaker's business. Choosing DeepSeek for Windows comes with several advantages: its compatibility with multiple Windows versions ensures a seamless experience regardless of your device's specifications. While these high-precision components incur some memory overhead, their impact can be minimized through efficient sharding across multiple DP (data-parallel) ranks in our distributed training system. While we're still a long way from true artificial general intelligence, seeing a machine think this way shows how much progress has been made. Researchers from BAAI published a paper exploring a novel way to evaluate LLMs: debate. V3.pdf (via): The DeepSeek v3 paper (and model card) are out, after yesterday's mysterious release of the undocumented model weights.
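
The training figures above are internally consistent, which a quick back-of-the-envelope check confirms. The sketch below uses only the numbers quoted in the paragraph; the implied price per GPU hour is derived here rather than stated in the text, and the constant and function names are illustrative.

```python
# Back-of-the-envelope check of the training-compute figures quoted above.
DEEPSEEK_V3_GPU_HOURS = 2_788_000      # H800 GPU hours
DEEPSEEK_V3_COST_USD = 5_576_000       # estimated training cost in USD
LLAMA_31_405B_GPU_HOURS = 30_840_000   # GPU hours quoted for Llama 3.1 405B


def cost_per_gpu_hour(cost_usd: float, gpu_hours: float) -> float:
    """Implied price of one GPU hour, given a total cost and total GPU hours."""
    return cost_usd / gpu_hours


def compute_ratio(gpu_hours_a: float, gpu_hours_b: float) -> float:
    """How many times more GPU hours run A used than run B."""
    return gpu_hours_a / gpu_hours_b


if __name__ == "__main__":
    rate = cost_per_gpu_hour(DEEPSEEK_V3_COST_USD, DEEPSEEK_V3_GPU_HOURS)
    ratio = compute_ratio(LLAMA_31_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
    print(f"Implied rate: ${rate:.2f} per GPU hour")           # $2.00
    print(f"Llama 3.1 405B used {ratio:.1f}x the GPU hours")   # 11.1x
```

The estimate therefore assumes roughly $2 per H800 GPU hour, and the "11x" figure is a rounding of about 11.06.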

Domestically, DeepSeek models offer strong performance at a low price and have become the catalyst for China's AI model price war. DeepSeek V3, with its open-source nature, efficiency, and strong performance in specific domains, offers a compelling alternative to closed-source models like ChatGPT. If your machine doesn't run these LLMs well (unless you have an M1 or above, you're in this category), here is an alternative solution I've found. Note: unlike Copilot, we'll focus on locally running LLMs. From 1 and 2, you should now have a hosted LLM model running. Because DeepSeek published its research, other model companies will learn from it and adapt. "The bottom line is the US outperformance has been driven by tech and the lead that US companies have in AI," Lerner said. The authors suggested that the best practices above for providing the model with its context, together with these prompt engineering techniques, have a positive effect on the results.
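
To make the advice about context and step-by-step prompting concrete, here is a minimal sketch of assembling a chat prompt. It assumes the common role/content message format used by chat-completion APIs; the helper name `build_messages` and the instruction wording are hypothetical and only illustrate the idea.

```python
from typing import Dict, List

# Hypothetical helper: assembles a chat prompt that (1) gives the model its
# context up front and (2) asks it to work step by step. The role/content
# message shape follows the common chat-completion convention; the wording
# of the instructions is illustrative only.


def build_messages(task: str, context: List[str],
                   system: str = "You are a helpful coding assistant.") -> List[Dict[str, str]]:
    # Number each context snippet so the model can refer back to it.
    context_block = "\n".join(f"[{i}] {snippet}" for i, snippet in enumerate(context, start=1))
    user_content = (
        f"Context:\n{context_block}\n\n"
        f"Task: {task}\n\n"
        "Work through the problem step by step before giving the final answer."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_content},
    ]


if __name__ == "__main__":
    msgs = build_messages(
        task="Write a function that parses ISO 8601 dates.",
        context=["The project targets Python 3.11.", "Only the standard library may be used."],
    )
    for m in msgs:
        print(m["role"], m["content"], sep="\n", end="\n\n")
```

Keeping context and task in clearly labeled sections makes it easy to swap in new context without rewriting the instructions.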

Interact with the chatbot as you would with a person: provide relevant context and work step by step to get the best results. For reference, this level of capability is supposed to require clusters of closer to 16K GPUs; the ones being brought up today are more like 100K GPUs. One of the fastest workstations on the market, the Lenovo ThinkStation PX boasts dual 4th Gen Intel Xeon Scalable CPUs and can run up to four NVIDIA RTX 6000 Ada Generation GPUs. Can you comprehend the anguish an ant feels when its queen dies? The DeepSeek-R1 model in Amazon Bedrock Marketplace can only be used with Bedrock's ApplyGuardrail API to evaluate user inputs and model responses for custom and third-party FMs available outside of Amazon Bedrock. The purpose of this post is to deep-dive into LLMs that are specialized in code generation tasks and see if we can use them to write code. However, the o1 model from OpenAI is designed for advanced reasoning and excels in tasks that require deeper thinking and problem-solving. The model was tested across several of the most challenging math and programming benchmarks, showing major advances in deep reasoning.

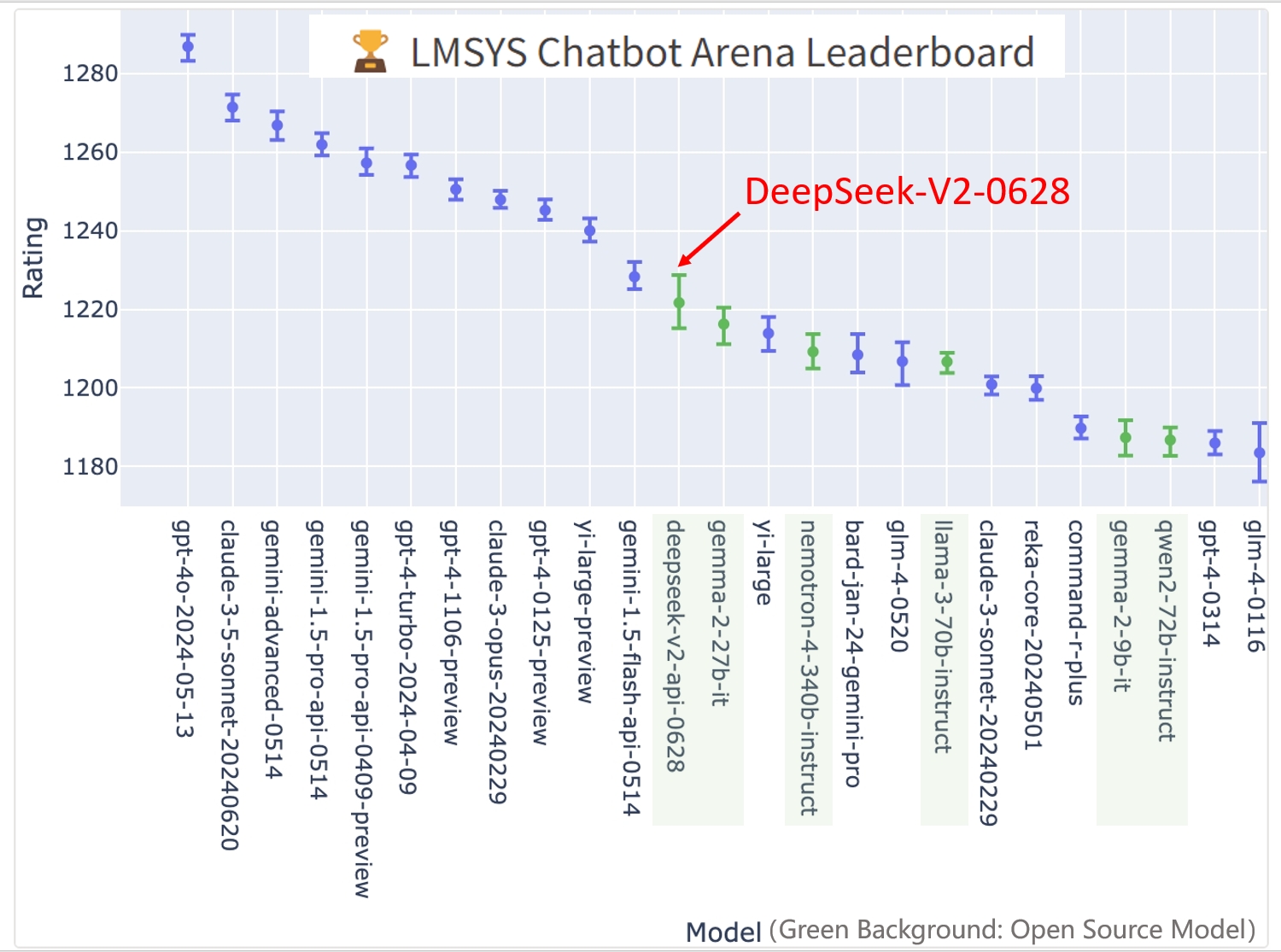

A major differentiator for DeepSeek is its ability to run its own data centers, unlike most other AI startups, which rely on external cloud providers. Ollama is essentially Docker for LLMs: it lets us quickly run various LLMs and host them locally behind standard completion APIs. Watch Run DeepSeek R1 Locally With LMStudio on YouTube for a quick step-by-step guide. DeepSeek made it to number one in the App Store, highlighting how Claude, in contrast, hasn't gained any traction outside of San Francisco. Wordware raised $30 million for its AI app development platform. Agentic platform H launched its first product. Physical AI platform BrightAI announced that it has reached $80 million in revenue. DeepSeek v3 benchmarks comparably to Claude 3.5 Sonnet, indicating that it is now possible to train a frontier-class model (at least for the 2024 version of the frontier) for less than $6 million! QwQ features a 32K context window, outperforming o1-mini and competing with o1-preview on key math and reasoning benchmarks. The benchmarks are quite impressive, but in my view they really only show that DeepSeek-R1 is definitely a reasoning model (i.e. the extra compute it's spending at test time is actually making it smarter).
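
As a sketch of what "hosting LLMs locally behind a completion API" looks like in practice, the snippet below sends a prompt to a local Ollama server using only the Python standard library. It assumes Ollama is running on its default port (11434) and that a DeepSeek-R1 model has already been pulled under the tag `deepseek-r1`; adjust the URL and model tag to your setup.

```python
import json
import urllib.request

# Assumptions: an Ollama server is running locally on its default port (11434)
# and a DeepSeek-R1 model has already been pulled under the tag "deepseek-r1".
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming completion request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text (the "response" field)."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    print(generate("deepseek-r1", "Explain what a mixture-of-experts model is in two sentences."))
```

Setting `"stream": False` returns the whole completion in one JSON object, which keeps the client simple; a streaming client would instead read one JSON object per line as tokens arrive.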
